In this part of the long-running series breaking down the NIST Secure Software Development Framework (SSDF), also known as NIST SP 800-218, we are going to discuss PW.6. This control is broken into two parts, PW.6.1 and PW.6.2. These two controls are related and defined as:

PW.6.1: Use compiler, interpreter, and build tools that offer features to improve executable security.

PW.6.2: Determine which compiler, interpreter, and build tool features should be used and how each should be configured, then implement and use the approved configurations.

We’re going to lump both of these together for the purpose of this post. It doesn’t make sense to split these two controls apart when we are reviewing what they actually mean, but there will be two posts on PW.6, and this is part one. Let’s start by looking at the examples for some hints on what the standard is looking for:

PW.6.1

Example 1: Use up-to-date versions of compiler, interpreter, and build tools.

Example 2: Follow change management processes when deploying or updating compiler, interpreter, and build tools, and audit all unexpected changes to tools.

Example 3: Regularly validate the authenticity and integrity of compiler, interpreter, and build tools. See PO.3.

PW.6.2

Example 1: Enable compiler features that produce warnings for poorly secured code during the compilation process.

Example 2: Implement the “clean build” concept, where all compiler warnings are treated as errors and eliminated except those determined to be false positives or irrelevant.

Example 3: Perform all builds in a dedicated, highly controlled build environment.

Example 4: Enable compiler features that randomize or obfuscate execution characteristics, such as memory location usage, that would otherwise be predictable and thus potentially exploitable.

Example 5: Test to ensure that the features are working as expected and are not inadvertently causing any operational issues or other problems.

Example 6: Continuously verify that the approved configurations are being used.

Example 7: Make the approved tool configurations available as configuration-as-code so developers can readily use them.

If we review the references, we find a massive swath of suggestions: everything from code signing to obfuscating binaries, to handling compiler warnings, to threat modeling. The net was cast wide on this one. Every environment is different, and every project or product uses its own technology, so there’s no way to “one size fits all” this control. This is one of the challenges that has made compliance so difficult for developers in the past. We have to determine how this control applies to our environment, and the way we apply it will be drastically different from the way someone else does.

We’re going to split this topic into build environments and compiler/interpreter security. In this post, we are going to focus on modern protection technology, specifically compiler and runtime security. Of course, you will have to review the guidance and understand what makes sense for your environment; everything we discuss here is for example purposes only.

Compiler security

When we think about the security of applications, we tend to focus on the code itself. Security vulnerabilities are the result of attackers causing unexpected behavior in the code: printing an unescaped string, adding to or subtracting from a very large integer, or maybe even getting the application to open a file it shouldn’t. We’ve all heard about memory safety problems and how hard they are to avoid in certain languages; C and C++ are legendary for their lack of memory protection. Our intent should be to write code that doesn’t have security vulnerabilities. The NSA and even Consumer Reports have recently come out against using memory-unsafe languages. When we can’t abandon memory-unsafe languages just yet (and in some cases we may never be able to), we can lean on technology to reduce the severity of memory safety bugs. There’s still a lot of COBOL out there, after all.

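While attackers can exploit some bugs to cause unexpected behavior, there are technologies, especially in compilers, that can lower the severity of certain bug classes or even eliminate the danger entirely. For example, stack buffer overflows in C used to be a huge problem. Then stack canaries were introduced: the compiler places a random value on the stack between a function’s local buffers and its saved return address, and checks that value before the function returns. If an overflow has overwritten the canary, the program aborts instead of jumping to an address the attacker controls. This has substantially reduced the severity of these bugs.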

Every compiler, operating system, and application is different, so all of this has to be decided for each individual application. For simplicity, we will use gcc to show how some of these technologies work and how to enable them. The Debian Wiki Hardening page has a huge amount of detail; we’ll just cover some of the quick, easy things.

```
user@debian:~/test$ gcc -o overflow test-overflow.c
user@debian:~/test$ ./overflow
Segmentation fault
user@debian:~/test$ gcc -fstack-protector -o overflow test-overflow.c
user@debian:~/test$ ./overflow
*** stack smashing detected ***: terminated
Aborted
```

In the above example, we can see that once we enable the gcc stack protector feature, the program detects the corrupted stack and aborts with a clear error instead of crashing with a segmentation fault. The check is inserted by the compiler but runs when the program runs, and stopping at that point keeps the overwritten return address from ever being used.

Most of these protections only reduce the severity of a very narrow class of bugs. These languages still have many other problems, and moving away from a memory-unsafe language is the best path forward. Not everyone can move to a memory-safe language, though, so compiler flags can help.

Compiler warnings are bugs

There was once a time when compiler warnings were ignored because they were just warnings. It didn’t really matter, or so we thought. Warnings were treated as mere suggestions from the compiler: if there was time later, they could be fixed. Except there is never time later. It turns out that sometimes those warnings are really important; they can be hints that a serious bug is waiting to be exploited. It’s hard to know which warnings are harmless and which are serious, so the current best practice is to fix them all to minimize vulnerabilities in your code.

If we compile our example code, we can see:

```
user@debian:~/test$ gcc -o overflow test-overflow.c
test-overflow.c: In function 'function':
test-overflow.c:6:2: warning: '__builtin_memcpy' writing 24 bytes into a region of size 9 overflows the destination [-Wstringop-overflow=]
    6 | strcpy(s, "This string is too long");
      | ^~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~
```

We see a warning telling us our string is too long for its destination. The build doesn’t fail, but that’s not a warning you should ignore. Adding gcc’s -Werror flag would turn this warning into a build failure, which is the “clean build” concept described in PW.6.2 Example 2.

Interpreted languages

The SSDF’s suggestion for interpreted languages is to use the latest interpreter. These languages are memory safe, but they are still vulnerable to logic bugs. Many interpreters are themselves written in C or C++, so it’s worth double-checking that they are built with the various compiler hardening features enabled.

Interpreters rarely have protections built into them. This goes back to the wide swath of guidance for this control. Programming languages have an effectively infinite number of possible use cases, so the problem set is too large to protect accurately. Memory safety is a very narrow set of problems that we still can’t get right; general-purpose programming is an infinitely wide set of problems.

There were some attempts to secure interpreted languages in the past, but the hardening proved too easy to break to rely on as a security feature. PHP and Ruby used to have safe modes, but it turned out they weren’t actually safe. Compiler and interpreter protections are hard to make effective in meaningful ways.

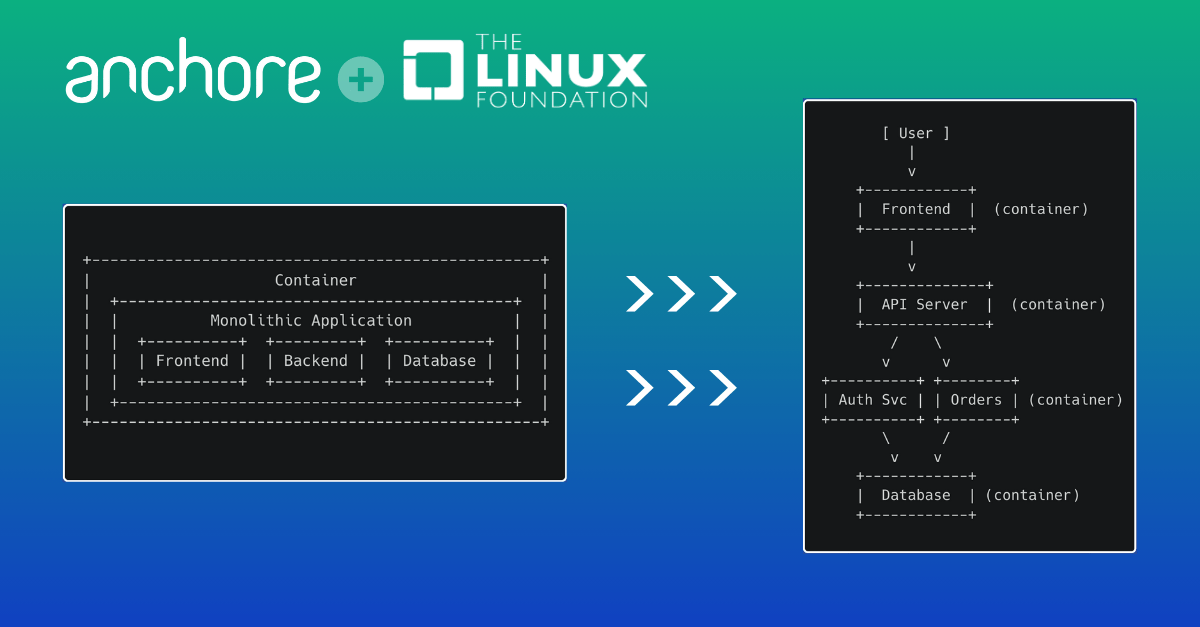

The best way to secure interpreted languages is to run code in sandboxes using things like virtualization and containers. That guidance won’t be covered in this post. In fact, SSDF doesn’t have guidance on how to run applications securely, since it focuses on development. There is plenty of other guidance on that topic, and we’ll make sure to cover it once the SSDF series is complete.

This complexity and difficulty are almost certainly why the SSDF guidance is to just run the latest interpreter. The latest interpreter version will include fixes for known bugs, security-related or otherwise.

Wrapping up

As we can see from this post, optimizing compiler and runtime security isn’t a simple task. It’s one of those things that can feel easy, but it’s really not. The devil is in the details. The only real guidance here is to figure out what works best in your environment and go with that.

If you missed the first post in this series, you can view it here. Next time we will discuss build systems. Build systems have been a popular topic over the last few years because they have been targets for attackers. Luckily for us, there is some solid guidance we can draw upon for securing a build system.

Josh Bressers

Josh Bressers is vice president of security at Anchore where he guides security feature development for the company’s commercial and open source solutions. He serves on the Open Source Security Foundation technical advisory council and is a co-founder of the Global Security Database project, which is a Cloud Security Alliance working group that is defining the future of security vulnerability identifiers.