When Docker was first introduced, Docker container security best practices primarily consisted of scanning Docker container images for vulnerabilities. Now that container use is widespread and container orchestration platforms have matured, a much more comprehensive approach to security is standard practice.

This post covers three foundational pillars of Docker container security and the best practices within each pillar:

- Securing the Host OS

- Securing the Container Images

  - Continuous Approach

  - Image Vulnerabilities

  - Policy Enforcement

  - Create a User for the Container Image

  - Use Trusted Base Images for Container Images

  - Do Not Install Unnecessary Packages in the Container

  - Add the HEALTHCHECK Instruction to the Container Image

  - Do Not Use Update Instructions Alone in the Dockerfile

  - Use COPY Instead of ADD When Writing Dockerfiles

  - Do Not Store Secrets in Dockerfiles

  - Only Install Verified Packages in Containers

- Securing the Container Runtime

  - Consider AppArmor and Docker

  - Consider SELinux and Docker

  - Seccomp and Docker

  - Do Not Use Privileged Containers

  - Do Not Expose Unused Ports

  - Do Not Run SSH Within Containers

  - Do Not Share the Host’s Network Namespace

  - Manage Memory and CPU Usage of Containers

  - Set On-Failure Container Restart Policy

  - Mount Containers’ Root Filesystems as Read-Only

  - Vulnerabilities in Running Containers

  - Unbounded Network Access from Containers

What Are Containers?

Containers are a method of operating system virtualization that enables you to run an application and its dependencies in resource-isolated processes. These isolated processes can run on a single host without visibility into each other’s processes, files, and network. Typically, each container instance provides a single service or discrete functionality (called a microservice) that constitutes one component of the application.

Containers are treated as immutable, which means that changes are not made to a running container instance. Instead, any change is made to the container image, and a new container is then deployed from it. This approach allows for more streamlined development and a higher degree of confidence when deploying containerized applications.

Securing the Host Operating System

Container security starts at the infrastructure layer and is only as strong as this layer. If attackers compromise the host operating system (OS), they may compromise all processes on the OS, including the container runtime. For the most secure infrastructure, you should design the base OS to run the container engine only, with no other processes that could be compromised.

For the vast majority of container users, the preferred host operating system is a Linux distribution. Using a container-specific host OS to reduce the surface area for attack is generally a best practice. Modern container platforms like Red Hat OpenShift run on Red Hat Enterprise Linux CoreOS, which is hardened with SELinux and offers process, network, and storage separation. To further strengthen the infrastructure layer of your container stack and improve your overall security posture, you should always keep the host operating system patched and updated.

Best Practices for Securing the Host OS

The following list outlines some best practices to consider when securing the host OS:

1. Choosing an OS

If you are running containers on a general-purpose operating system, consider switching to a container-specific operating system. These typically ship with security features such as SELinux enabled by default, automated updates, and image hardening. Bottlerocket from AWS is one such OS: it is designed for hosting containers and is free, open source, and Linux based.

With a general-purpose OS, you will need to manage every security feature independently. Hosts that run containers should not run any unnecessary system services or non-containerized applications. And you should consistently scan and monitor your host operating system for vulnerabilities. If you find vulnerabilities, apply patches and update the OS.

2. OS Vulnerabilities and Updates

Once you choose an operating system, it’s important to standardize on best practices and tooling to validate the versioning of packages and components contained within the base OS. Note that even a container-specific OS contains components that may become vulnerable and require remediation. You should use tools provided by the OS vendor or other trusted organizations to regularly scan and check for updates to components.

Even though security vulnerabilities may not be present in a particular OS package, you should update components if the vendor recommends an update. If it’s simpler for you to redeploy an up-to-date OS, that is also an option. With containerized applications, the host should remain immutable in the same manner containers should be. You should not be persisting data uniquely within the OS. Following this best practice will greatly reduce the attack surface and avoid drift. Lastly, container runtime engines such as Docker frequently update their software with fixes and features. You can mitigate vulnerabilities by applying the latest updates.

3. User Access Rights

All authentication directly to the OS should be audited and logged. You should only grant access to the appropriate users and use keys for remote logins. And you should implement firewalls and allow access only on trusted networks. You should also implement a robust log monitoring and management process that terminates in a dedicated log storage host with restricted access.

Additionally, the Docker daemon runs with ‘root’ privileges by default. You must explicitly add a user to the ‘docker’ group to grant that user access to the daemon. Because that access is effectively root-level access to the host, remove any users from the ‘docker’ group who are not trusted or do not need those privileges.
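As a starting point for reviewing group membership, the following minimal sketch lists the members of the ‘docker’ group using Python’s standard `grp` module. It assumes a Unix host; the function name is illustrative, and `gr_mem` only reports supplementary members, so a user whose primary group is ‘docker’ would not appear.

```python
import grp


def docker_group_members(group_name: str = "docker") -> list[str]:
    """Return the supplementary members of the group, or [] if it does not exist."""
    try:
        return sorted(grp.getgrnam(group_name).gr_mem)
    except KeyError:
        # The group is not defined on this host.
        return []
```

Comparing the returned list against an approved list of administrators is one simple way to spot accounts that should be removed.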

4. Host File System

Make sure containers are run with the minimal required set of file system permissions. Containers should not be able to mount sensitive directories on a host’s file system, especially when they contain configuration settings for the OS. Allowing such mounts is a bad practice: because the Docker service runs as root, an attacker who compromises the container could modify those directories, execute any command that the Docker service can run, and potentially gain access to the entire host system.

5. Audit Considerations for Docker Runtime Environments

You should conduct audits on the following:

- Container daemon activities

- These files and directories:

  - /var/lib/docker

  - /etc/docker

  - docker.service

  - docker.socket

  - /etc/default/docker

  - /etc/docker/daemon.json

  - /usr/bin/docker-containerd

  - /usr/bin/docker-runc
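One way to act on this list on a Linux host that runs auditd is to generate a watch rule per path. The sketch below assumes the `-w <path> -p <permissions> -k <key>` watch-rule syntax accepted by `auditctl`; the key name `docker` and the function name are illustrative choices. The `docker.service` and `docker.socket` unit files are left out because their full paths vary by distribution and need to be looked up on the host.

```python
# Absolute paths taken from the list above.
AUDIT_PATHS = [
    "/var/lib/docker",
    "/etc/docker",
    "/etc/default/docker",
    "/etc/docker/daemon.json",
    "/usr/bin/docker-containerd",
    "/usr/bin/docker-runc",
]


def audit_rules(paths, key="docker"):
    """Build one auditd watch rule per path (read, write, execute, attribute change)."""
    return [f"-w {path} -p rwxa -k {key}" for path in paths]
```

The resulting lines could be written to a rules file that auditd loads at startup, after confirming that each path exists on the host in question.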

Securing Docker Images

You should know exactly what’s inside a Docker container before deploying it. Many of the challenges associated with ensuring Docker image security can be addressed simply by following best practices for securing Docker images.

What Are Docker Images?

So first of all, what are Docker images? Simply put, a Docker container image is a collection of data that includes all files, software packages, and metadata needed to create a running instance of a container. In essence, an image is a template from which a container can be instantiated. Images are immutable, which means that once they’ve been built, they cannot be changed. If someone were to make a change, a new image would be built as a result.

Container images are built in layers. The base layer contains the core components of an image and is the foundation upon which all other components and layers are added. Commonly, base layers are minimal and typically representative of common OSes.

Container images are most often stored in a central location called a registry. With registries like Docker Hub, developers can store their own images or find and download images that have already been created.

Docker Image Security

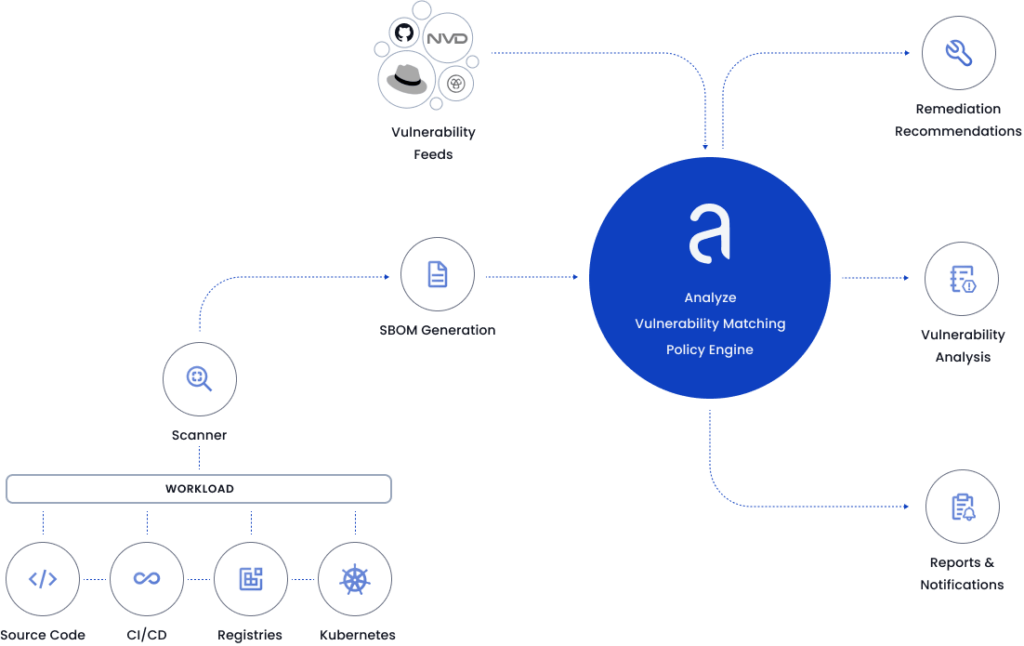

Incorporating mechanisms to conduct static analysis on your container images provides insight into any potentially vulnerable OS and non-OS packages. You can use an automated tool like Anchore to prevent non-compliant images from being promoted into trusted registries through policy checks within a secure container build pipeline.

Policy enforcement is essential because vulnerable images that make their way into production environments pose significant threats that can be costly to remediate and can damage your organization’s reputation. Within these images, focus on the security of the applications that will run.


Docker Image Security Best Practices

The following list outlines some best practices to consider when implementing Docker image security:

1. Continuous Approach

A fundamental approach to securing container images is to automate building and testing. You should set up the tooling to analyze images continuously. For container image-specific pipelines, you should employ tools that are purpose-built to uncover vulnerabilities and configuration defects. Your tooling should give developers the option to create governance around the images being scanned so that, based on configurable policy rules, an image either passes the scan step or fails it and progresses no further in the pipeline. In short, development teams need a structured and reliable process for building and testing container images.

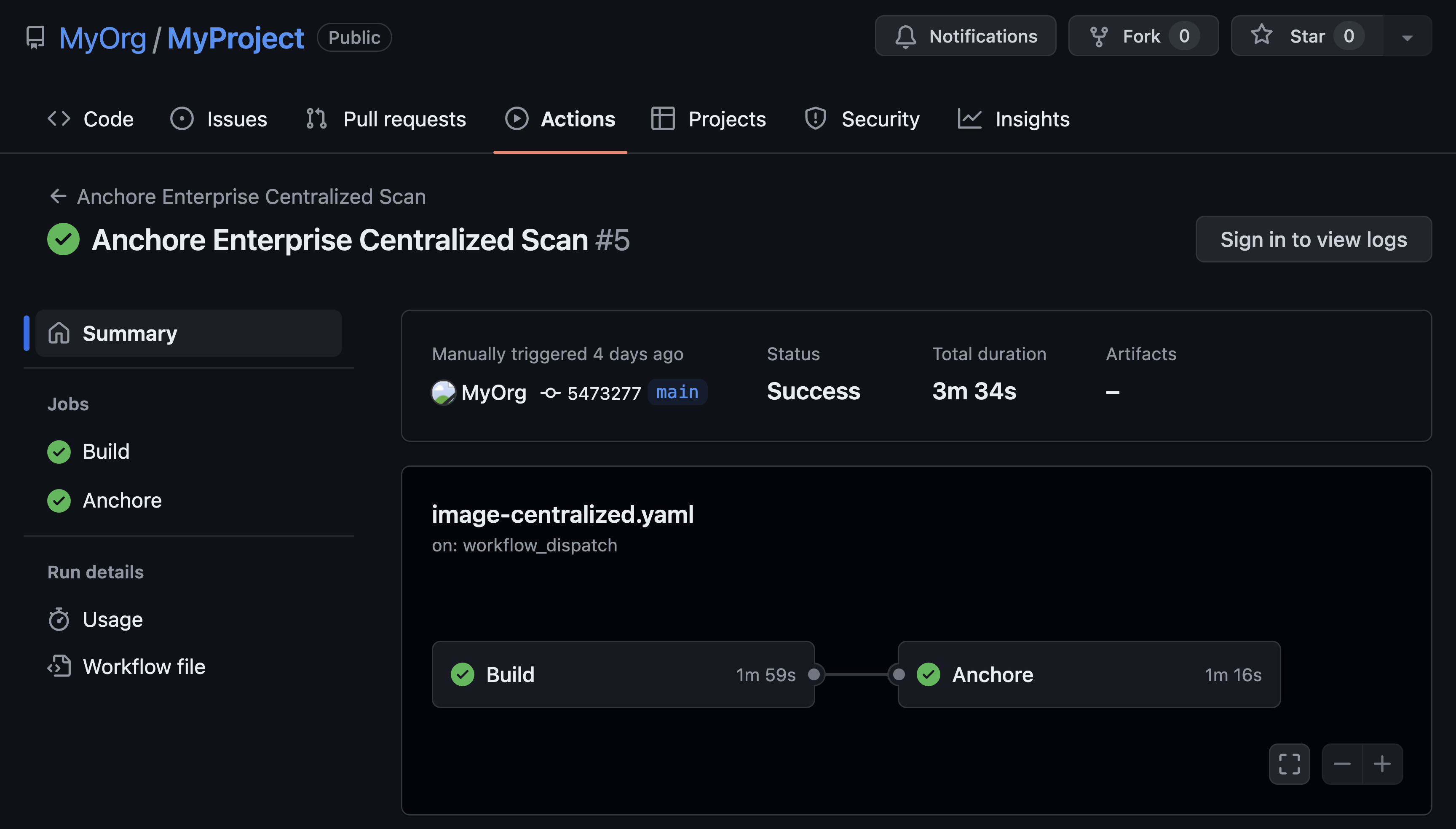

Here’s how this process might look:

- Developer commits code changes to source control

- CI platform builds container image

- CI platform pushes container image to staging registry

- CI platform calls a tool to scan the image

- The tool passes or fails the image based on the policy mapped to the image

- If the image passes the policy evaluation and all other tests defined in the pipeline, the image is pushed to a production registry
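The ordering of these steps can be sketched in a few lines. This is a minimal illustration rather than a real CI integration: the `build`, `push`, and `scan` callables are hypothetical stand-ins for whatever your CI platform and scanning tool provide, and the registry names are placeholders.

```python
from typing import Callable


def run_image_pipeline(
    image: str,
    build: Callable[[str], None],
    push: Callable[[str, str], None],
    scan: Callable[[str], bool],
) -> bool:
    """Build, stage, and scan an image; promote it only if the scan passes."""
    build(image)
    push(image, "staging")
    if not scan(image):
        # A failed policy evaluation stops the pipeline before production.
        return False
    push(image, "production")
    return True
```

The key design point is that the production push is unreachable unless the scan step returns a passing result.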

2. Image Vulnerabilities

As part of a continuous approach to securing container images, you should scan packages and components within the image for common and known vulnerabilities. Image scanning should be able to uncover vulnerabilities contained within all layers of the image, not just the base layer.

Moreover, because vulnerable third-party libraries are often part of the application code, image inspection and analysis must be able to detect vulnerabilities for OS and non-OS packages contained within the images. Should a new vulnerability for a package be published after the image has been scanned, the tool should retrieve new vulnerability info for the applicable component and alert the developers so that remediation can begin.

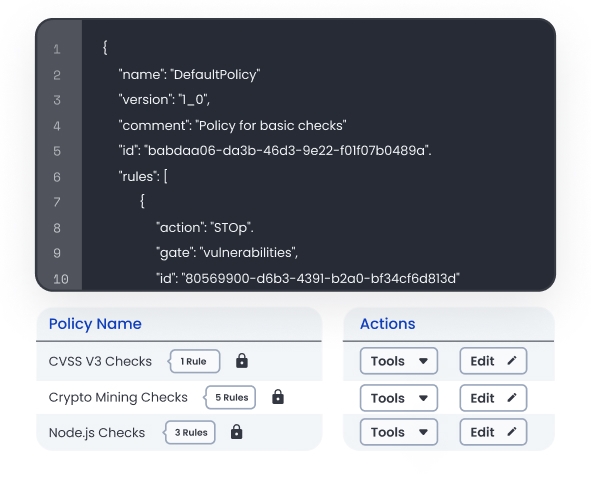

3. Policy Enforcement

You should create and enforce policy rules based on the severity of the vulnerability as defined by the Common Vulnerability Scoring System (CVSS).

Example policy rule: If the image contains any vulnerable packages with a severity greater than medium, stop this build.
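The example rule can be expressed as a small gate function. This is a sketch under stated assumptions: the severity names and their ordering, the `Finding` type, and the function names are illustrative and do not reflect any particular scanner’s output format.

```python
from dataclasses import dataclass

# Assumed severity scale, lowest to highest.
SEVERITY_ORDER = ["negligible", "low", "medium", "high", "critical"]


@dataclass(frozen=True)
class Finding:
    package: str
    vulnerability_id: str
    severity: str


def blocking_findings(findings, threshold="medium"):
    """Return findings whose severity is strictly greater than the threshold."""
    limit = SEVERITY_ORDER.index(threshold)
    return [f for f in findings if SEVERITY_ORDER.index(f.severity.lower()) > limit]


def gate(findings, threshold="medium"):
    """Return 'fail' if any finding exceeds the threshold, otherwise 'pass'."""
    return "fail" if blocking_findings(findings, threshold) else "pass"
```

A severity that is not in the assumed scale raises `ValueError`, which is a deliberate choice here so that unrecognized scanner output stops the build instead of passing silently.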

4. Create a User for the Container Image

Containers should be run as a non-root user whenever possible. The USER instruction within the Dockerfile sets the user that the container’s processes run as.
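A simple check for this practice is to read the Dockerfile and see which USER instruction is in effect at the end. The sketch below is deliberately simplified: it assumes each base image runs as root unless a USER instruction follows, it ignores line continuations, and the function names are illustrative.

```python
def effective_user(dockerfile_text: str) -> str:
    """Return the last USER value of the final build stage, defaulting to root."""
    user = "root"
    for raw in dockerfile_text.splitlines():
        parts = raw.strip().split(None, 1)
        if len(parts) != 2:
            continue
        instruction = parts[0].upper()
        if instruction == "FROM":
            # Assumption: a new stage starts as root until USER says otherwise.
            user = "root"
        elif instruction == "USER":
            user = parts[1].strip()
    return user


def runs_as_root(dockerfile_text: str) -> bool:
    """True if the effective user is root by name or by UID 0."""
    name = effective_user(dockerfile_text).split(":", 1)[0]
    return name in ("root", "0")
```

For example, a Dockerfile that creates a hypothetical `appuser` account and ends with `USER appuser` would not be flagged, while one with no USER instruction would.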

5. Use Trusted Base Images for Container Images

Ensure that the container image is based on another established and trusted base image downloaded over a secure channel. Official repositories contain Docker images curated and optimized by the Docker community or the associated vendor. Developers should be connecting to and downloading images from secure, trusted, private registries. These trusted images should be as minimal as possible to reduce the attack surface.

Docker Content Trust and Notary can be configured to give developers the ability to verify image tags and enforce client-side signing for data sent to and received from remote Docker registries. Content trust is disabled by default.

For more info see Docker Content Trust and Notary. In the context of Kubernetes, see Connaisseur, which supports Notary/Docker Content Trust.

6. Do Not Install Unnecessary Packages in the Container

To reduce container size and minimize the attack surface, do not install packages outside the scope and purpose of the container.

7. Add the HEALTHCHECK Instruction to the Container Image

The HEALTHCHECK instruction tells Docker how to determine whether the state of the container is normal. Add this instruction to Dockerfiles so that Docker can mark a non-working container as unhealthy. Based on that status, an orchestrator such as Docker Swarm can stop the non-working container and instantiate a new one.
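A health check command reports its result through its exit code, where 0 means healthy and 1 means unhealthy. The following sketch shows a probe function that a HEALTHCHECK command could call in an image that already ships Python. The function name, the default timeout, and the idea of probing an HTTP endpoint are assumptions for illustration.

```python
import urllib.request


def probe(url: str, timeout: float = 3.0) -> int:
    """Return 0 if the URL answers with HTTP 200, otherwise 1."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return 0 if response.status == 200 else 1
    except OSError:
        # Connection failures, timeouts, and HTTP error statuses all land here.
        return 1
```

A small entry-point script would pass the result to `sys.exit` so that Docker sees it as the command’s exit status.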

8. Do Not Use Update Instructions Alone in the Dockerfile

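Do not use update instructions such as apt-get update alone on a single line in the Dockerfile. Because Docker caches each layer, a standalone update layer can be reused from the cache, and later install instructions may then run against a stale package index. Instead, combine the update and the install in the same RUN instruction: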

RUN apt-get update && apt-get install -y bzr cvs git mercurial subversion

Also, see leveraging the build cache for insight on how to reduce the number of layers and for other Dockerfile best practices.

9. Use COPY Instead of ADD When Writing Dockerfiles

The COPY instruction copies files from the local build context to the container file system. The ADD instruction can additionally retrieve files from remote URLs and automatically unpack local archives. Because ADD can bring in files remotely, it increases the risk of introducing malicious packages and vulnerabilities from remote URLs.
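To find the riskiest uses of ADD in an existing Dockerfile, you can look for ADD instructions whose source is a URL. This is a minimal sketch with an illustrative function name. It only handles the shell form of the instruction on a single line, so the JSON array form and line continuations would need extra handling.

```python
def remote_add_instructions(dockerfile_text: str):
    """Return (line_number, line) pairs for ADD instructions that fetch a URL."""
    flagged = []
    for number, raw in enumerate(dockerfile_text.splitlines(), start=1):
        parts = raw.strip().split()
        if parts and parts[0].upper() == "ADD":
            if any(p.startswith(("http://", "https://")) for p in parts[1:]):
                flagged.append((number, raw.strip()))
    return flagged
```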

10. Do Not Store Secrets in Dockerfiles

Do not store any secrets within container images. Developers may sometimes leave AWS keys, API keys, or other secrets inside of images. If attackers obtain these keys, they can exploit them. Removing a secret in a later Dockerfile instruction does not help, because the secret remains in the earlier image layer. Secrets should always be stored outside of images and provided dynamically at runtime as needed.
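A basic pre-build check can catch one common mistake, which is an AWS access key ID pasted into a Dockerfile or configuration file. The sketch below assumes the widely used `AKIA` prefix followed by 16 uppercase letters or digits. It is one pattern only, not a complete secret scanner, and the function name is illustrative.

```python
import re

# Assumed shape of a long-term AWS access key ID.
AWS_ACCESS_KEY_ID = re.compile(r"\bAKIA[0-9A-Z]{16}\b")


def find_aws_access_key_ids(text: str) -> list[str]:
    """Return every substring that looks like an AWS access key ID."""
    return AWS_ACCESS_KEY_ID.findall(text)
```

Running this over the build context before `docker build` is cheap, although a dedicated scanner will cover far more secret types.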

11. Only Install Verified Packages in Containers

Download and install verified packages from trusted sources, such as those available via apt-get from official Debian repositories. To verify Debian packages within a Dockerfile, see Redis Dockerfile.

Implementing Container Image Security

One way to implement Docker image security best practices is with Anchore, a solution that conducts static analysis on container images and evaluates these images against user-defined checks. With Anchore, you can identify vulnerabilities within packages for OS and non-OS components and use policy rules to enforce the image configuration best practices described above.

With Anchore, you can configure policies to check for the following:

- Vulnerabilities

- Packages

- Secrets

- Image metadata

- Exposed ports

- Effective users

- Dockerfile instructions

- Password files

- Files

A popular implementation is to use the open source Jenkins CI tool along with Anchore for scanning and policy checks to build secure and compliant container images in a CI pipeline.

Securing Docker Container Runtime

Docker runtime security is critical to your overall container security strategy. It’s important to set up tooling to monitor the containers that are running. If new vulnerabilities are published that affect a particular container, alerting mechanisms need to be in place so that you can stop and replace the vulnerable container quickly.

The first step in securing the container runtime is securing the registries where the images reside. It’s considered best practice to pull and run images only from trusted container registries. For an added layer of security, you should only promote trusted and signed images into production registries. Vulnerable, non-compliant images should not live in container registries where images are staged for production deployments.

The container engine hosts and runs containers built from container images that are pulled from registries. Namespaces and Control Groups are two critical aspects of container runtime security:

- Namespaces provide the first and most straightforward form of isolation: Processes running within a container cannot see or affect processes running in another container or in the host system. Docker creates a set of namespaces for each container by default, so avoid options that share the host’s namespaces with the container.

- Control Groups implement resource accounting and limiting. Always set resource limits for each container so that a single container does not hog all resources and bring down the system.

Only trusted users should control the container engine. For example, if Docker is the container runtime, root privileges are required to run Docker commands, and you should exercise caution when changing the membership of the ‘docker’ group.

You should deploy cloud-native security tools to detect such network traffic anomalies as unexpected traffic flows within the network, scanning of ports, or outbound access retrieving information from questionable locations. In addition, your security tools should monitor for invalid process execution or system calls as well as for writes and changes to protected configuration locations and file types. Typically, you should run containers with their root filesystems in read-only mode to isolate writes to specific directories.

If you are using Kubernetes to manage containers, your workload configurations are declarative and described as code in YAML files. These files can describe insecure configurations that can potentially be exploited by an attacker. It is generally good practice to incorporate Infrastructure as Code (IaC) scanning as part of a deployment and configuration workflow prior to applying the configuration in a live environment.

Why Is Docker Container Runtime Security So Important?

One of the last stages of a container’s lifecycle is deployment to production. For many organizations, this stage is the most critical. Often a production deployment is the longest period of a container’s lifecycle, and therefore it needs to be consistently monitored for threats, misconfigurations, and other weaknesses. Once your containers are live and running, it is vital to be able to take action quickly and in real time to mitigate potential attacks. Simply put, production deployments must be protected because they are valuable assets for organizations whose existence depends on them.

Docker Container Runtime Best Practices

The following list outlines some best practices to follow when implementing Docker container runtime security:

1. Consider AppArmor and Docker

From the Docker documentation:

AppArmor (Application Armor) is a Linux security module that protects an operating system and its applications from security threats. To use it, a system administrator associates an AppArmor security profile with each program. Docker expects to find an AppArmor policy loaded and enforced.

AppArmor is available on Debian and Ubuntu by default. In short, it is important that you either keep Docker’s default AppArmor profile enabled or create your own custom security profile for containers specific to your organization. Once a profile is applied, the container is subject to its set of restrictions and capabilities, such as network access or file read/write/execute permissions. Read the official Docker documentation on AppArmor.

2. Consider SELinux and Docker

SELinux is a Linux kernel security module that provides mandatory access control, which greatly augments the Discretionary Access Control model. If it’s available on the Linux host OS that you are using, you can start the Docker daemon with SELinux enabled (the --selinux-enabled option). The container would then have a set of restrictions as defined in the SELinux policy. Read more about SELinux.

3. Seccomp and Docker

Seccomp (secure computing mode) is a Linux kernel feature that you can use to restrict the actions available within a container. The default seccomp profile disables about 44 system calls out of more than 300. At a minimum, you should ensure that containers are run with the default seccomp profile. Get more information on seccomp.

4. Do Not Use Privileged Containers

Do not allow containers to be run with the --privileged flag because it gives all capabilities to the container and also lifts all the limitations enforced by the device cgroup controller. In short, the container can then do nearly everything the host can do.

5. Do Not Expose Unused Ports

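The EXPOSE instruction in the Dockerfile declares which ports a container is expected to listen on, and those ports are published on the host when the container is started with -P (or individually with -p). Only the ports that are needed and relevant to the application should be open. If you have access to the Dockerfile, look for the EXPOSE instruction to determine which ports are declared.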

6. Do Not Run SSH Within Containers

An SSH server should not be running within a container. Read this blog post for details.

7. Do Not Share the Host’s Network Namespace

When the networking mode on a container is set to --net=host, the container will not be placed inside a separate network stack. In other words, this flag tells Docker not to containerize the container’s networking. This is potentially dangerous because it allows the container to open low-numbered ports like any other root process. Additionally, a container could potentially do unexpected things such as terminate the Docker host. Bottom line: Do not add the --net=host option when running a container.
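Both this practice and the earlier one about privileged containers can be checked before a container starts by inspecting the `docker run` arguments. The following sketch flags `--privileged` and host networking in either the `--net`/`--network` spelling. The function name is illustrative, and the check covers only these flags.

```python
def risky_flags(argv: list[str]) -> list[str]:
    """Return the privileged and host-network flags found in a docker run argv."""
    found = []
    for index, arg in enumerate(argv):
        if arg in ("--privileged", "--privileged=true"):
            found.append(arg)
        elif arg in ("--net=host", "--network=host"):
            found.append(arg)
        elif arg in ("--net", "--network"):
            # Space-separated form: the value is the next argument.
            if index + 1 < len(argv) and argv[index + 1] == "host":
                found.append(f"{arg} host")
    return found
```

A wrapper script or CI job could refuse to launch any command for which this list is not empty.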

8. Manage Memory and CPU Usage of Containers

By default, a container has no resource constraints and can use as much of a given resource as the host’s kernel allows. Additionally, all containers on a Docker host share the resources equally and no memory limits are enforced. A running container that begins to consume too much memory on the host machine is a major risk. For Linux hosts, if the kernel detects that there is not enough memory to perform important system functions, it will kill processes to free up memory, which could potentially bring down an entire system if the wrong process is killed.

Docker can enforce hard memory limits, which allow the container to use no more than a given amount of user or system memory. Docker can also enforce soft memory limits, which allow the container to use as much memory as needed unless certain conditions are met. For a running container, the --memory flag defines the maximum amount of memory the container can use, and the --memory-reservation flag sets a soft limit. When managing container CPU, flags such as --cpus and --cpu-shares give you more control over the container’s access to the host machine’s CPU cycles.
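As a sketch of how these limits might be assembled, the helper below builds the `--memory`, `--memory-reservation`, and `--cpus` arguments for `docker run` and rejects a soft limit that is not below the hard limit. The function names and the simplified size parser (integer values with an optional b, k, m, or g suffix) are assumptions for illustration.

```python
_UNITS = {"b": 1, "k": 1024, "m": 1024**2, "g": 1024**3}


def parse_size(value: str) -> int:
    """Convert a size such as '512m' or '1g' to bytes."""
    value = value.strip().lower()
    if value and value[-1] in _UNITS:
        return int(value[:-1]) * _UNITS[value[-1]]
    return int(value)


def resource_limit_args(memory: str, memory_reservation: str, cpus: float) -> list[str]:
    """Build docker run arguments for a hard limit, a soft limit, and a CPU cap."""
    if parse_size(memory_reservation) >= parse_size(memory):
        raise ValueError("the soft limit must be lower than the hard limit")
    if cpus <= 0:
        raise ValueError("cpus must be positive")
    return [
        f"--memory={memory}",
        f"--memory-reservation={memory_reservation}",
        f"--cpus={cpus}",
    ]
```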

9. Set On-Failure Container Restart Policy

By using the --restart flag when running a container, you can specify how a container should or should not be restarted on exit. If a container keeps exiting and attempting to restart, it could possibly lead to a denial of service on the host. Additionally, if you ignore the exit status of a container and always attempt to restart it, the root cause behind the termination may never be investigated. You should always investigate when a container attempts to be restarted on exit. Configure the on-failure restart policy with a retry limit, for example --restart=on-failure:5, to limit the number of restart attempts.

10. Mount Containers’ Root Filesystems as Read-Only

You should run containers with their root filesystems in read-only mode to isolate writes to specifically defined directories, which you can easily monitor. Using read-only filesystems makes containers more resilient to being compromised. Additionally, because containers are immutable, you should not write data within them. Instead, designate an explicitly defined volume for writes.
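Put together with the restart policy from the previous item, a hardened invocation might be assembled as in the sketch below. The `--read-only`, `--tmpfs`, `--volume`, and `--restart` options are standard `docker run` flags. The function name, the choice of `/tmp` as scratch space, and the volume specification passed in by the caller are illustrative assumptions.

```python
def hardened_run_command(image: str, data_volume: str, max_restarts: int = 5) -> list[str]:
    """Build a docker run command with a read-only root and one writable volume."""
    if max_restarts < 1:
        raise ValueError("max_restarts must be at least 1")
    return [
        "docker", "run", "--detach",
        "--read-only",                 # root filesystem is mounted read-only
        "--tmpfs", "/tmp",             # in-memory scratch space for temporary files
        "--volume", data_volume,       # the only persistent writable location
        f"--restart=on-failure:{max_restarts}",
        image,
    ]
```

The returned list can be passed to `subprocess.run` as-is, which avoids shell quoting issues.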

11. Vulnerabilities in Running Containers

You should monitor containers for existing vulnerabilities, and when problems are detected, patch or remediate them. If vulnerabilities exist, container scanning should produce an inventory of vulnerable packages (CVEs) at the operating system and application layers. You should also implement container-aware tools designed to operate with the same elasticity and agility as containers.

Checks you should be looking for include:

- Invalid or unexpected process execution

- Invalid or unexpected system calls

- Changes to protected configs

- Writes to unexpected locations or file types

- Malware execution

- Traffic sent to unexpected network destinations

12. Unbounded Network Access from Containers

Controlling the egress network traffic sent by containers is critical. Tools for monitoring the inter-container traffic should at the very least accomplish the following:

- Automated determination of proper container networking surfaces, including inbound and process-port bindings

- Detection of traffic flow both between containers and between containers and other network entities

- Detection of network anomalies, such as port scanning and unexpected traffic flows within your organization’s network
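The idea of flagging unexpected egress can be illustrated with a simple allowlist check. This sketch assumes you already have a list of destination IP addresses observed for a container and a list of permitted CIDR ranges. The function name and both inputs are hypothetical and stand in for whatever your monitoring tool exports.

```python
import ipaddress


def unexpected_destinations(destinations: list[str], allowed_cidrs: list[str]) -> list[str]:
    """Return the destination addresses that fall outside every allowed range."""
    networks = [ipaddress.ip_network(cidr) for cidr in allowed_cidrs]
    return [
        dest
        for dest in destinations
        if not any(ipaddress.ip_address(dest) in network for network in networks)
    ]
```

Dedicated network monitoring tools do far more than this, but the same allowlist principle underlies many egress policies.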

A Final Word on Container Security Best Practices

Containerized applications and environments present additional security concerns not present with non-containerized applications. But by adhering to the fundamental concepts for host and application security outlined here, you can achieve a stronger security posture for your cloud-native environment.

And while host security, container image scanning, and runtime monitoring are great places to start, adopting additional security best practices like scanning application source code (both open source and proprietary) for vulnerabilities and coding errors along with following a policy-based compliance approach can vastly improve your container security. To see how continuous security embedded at each step in the software lifecycle can help you improve your container security, request a demo of Anchore.